
In 1962 something unusual happened at Bell Labs in Murray Hill, New Jersey. After a long day tending the room-size IBM 7090, an engineer named A. Michael Noll rushed down the hall with a printout in hand. The machine’s plotter had spit out an arrangement of lines. The abstract design on the sheet could have passed for a work in the print section at a museum of modern art. Noll had decided to explore the machine’s creative potential by having it make randomized patterns. Noll’s breakthrough would eventually be called “computer art” by both fellow programmers and cultural historians. His memo announcing the creation to the Bell Labs staff, however, was more measured: “Rather than risk an unintentional debate at this time on whether the computer-produced designs are truly art or not,” he wrote, “the results of the machine’s endeavors will simply be called ‘Patterns.’”¹

The idea that machines can mimic certain aspects of human reasoning—thus becoming “artificially intelligent”—stretches back to the birth of information theory in the 1940s. But for decades after the first experiments in digital “thinking,” little to no progress was made. Prognostications about a cybernetic future stalled and funding dried up, in what researchers referred to as an “AI winter.” But recently, optimism has returned to the field. In the past several years, machine learning algorithms—powered by a deluge of images from social media—have advanced enough that artificial neural networks produce images that some say exhibit creativity in their own right.

AI was already capable of automating rote, mechanical tasks. But its newfound ability to generate images has inspired a resurgence in the debate about machine creativity. It also prompts the question of what art critics can say about work made by computers. Criticism plays a key function in the humanist tradition as a mediator between institutions and the public. Not only do critics tie cultural production to its historical and socioeconomic context, but they also highlight art’s role in cultivating the democratic values of empathy and egalitarianism. Three recent books offer possible paths for criticism that addresses creative AI. But all of them have serious shortcomings.

Courtesy Los Angeles County Museum of Art.

Noll’s “patterns” are the earliest examples cited by Arthur I. Miller in his book The Artist in the Machine: The World of AI-Powered Creativity (MIT Press, 2019). A historian of science, Miller grounds his writing on AI in his longtime interest in creativity and genius. “The world of intellect is not a level playing field,” he writes. “No matter how diligently we paint, practice music, ponder science, or write literature, we will never be Picasso, Bach, Einstein, or Shakespeare.” Miller begins from the premise that the brain is, like the computer, an information processor. Computers and artists alike ingest data, apply rules, and adapt them to create something novel. The genius brain makes connections better and faster than the average one, like a computer with more processing power. If creativity is, as Miller defines it, the production of new knowledge from already existing knowledge, then the computer may someday have the capacity to match and exceed the human brain.

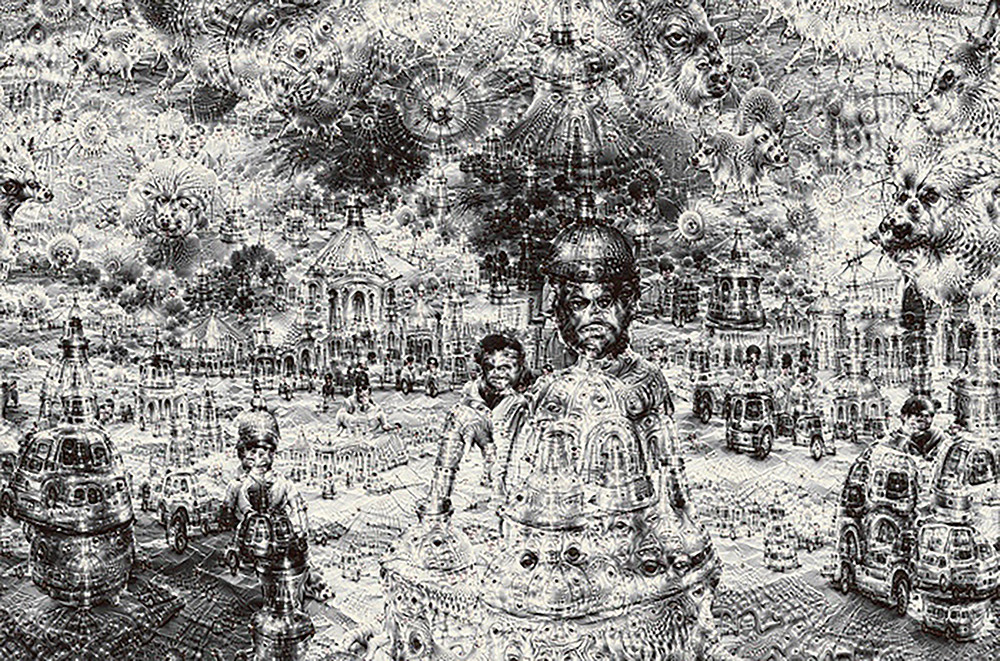

Miller’s book pays special attention to Alexander Mordvintsev’s DeepDream, a 2015 breakthrough for machine creativity. While working for Google as a computer vision engineer, Mordvintsev interrupted a deep neural network trained on images of dogs and cats. His intervention rendered a momentary visual trace of the network’s attempt to spot patterns in data. The result was an image of a technicolored chimera, half-dog and half-cat, that spliced together parts of the reference image (a kitten and a beagle perched on adjacent tree stumps) with thousands of other images of similar subjects. It was dubbed “Nightmare Beast” after it went viral on the internet.

Miller discusses a host of other engineer-artists who have helped advance the growing phenomenon of art made with neural networks. In Portraits of Imaginary People (2017), Michael Tyka drew upon thousands of portrait photos from Flickr to create images of people who never existed. Leon Gatys’s style-transfer work employs a neural network to make found images resemble paintings by Rembrandt, Cézanne, and Van Gogh. Miller doesn’t fully explain how these images exhibit creativity. The computer makes decisions about colors and brushstrokes, but its transformations are more of a novelty act than the creation of original work.

Almost all of Miller’s triumphant examples employ unsupervised learning, in which a neural network generates images by itself. A more specific subset of this process used by artists is known as a generative adversarial network (GAN), which involves two dueling neural networks: the discriminator, which is trained on real images, and the generator, which scrambles pixels to make completely new images. The generator sends each image it makes to the discriminator, which judges whether or not the image is made by the generator.
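The generator-versus-discriminator contest described above can be made concrete with a deliberately tiny sketch. It assumes single numbers stand in for images, a two-parameter generator, and a two-parameter discriminator; every function name, starting value, and learning rate here is hypothetical, chosen for illustration rather than taken from any artist's or researcher's system.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def generate(gen, noise):
    # Generator: turns random noise into candidate "data" (here, plain numbers).
    a, b = gen
    return [a * z + b for z in noise]


def discriminate(disc, xs):
    # Discriminator: estimated probability that each sample is real.
    w, c = disc
    return [sigmoid(w * x + c) for x in xs]


def disc_loss(disc, real, fake):
    # Low when real samples score near 1 and generated samples score near 0.
    eps = 1e-12
    p_real = discriminate(disc, real)
    p_fake = discriminate(disc, fake)
    return (-sum(math.log(p + eps) for p in p_real) / len(real)
            - sum(math.log(1.0 - p + eps) for p in p_fake) / len(fake))


def disc_step(disc, real, fake, lr=0.05):
    # One gradient-descent step on the discriminator's loss.
    w, c = disc
    p_real = discriminate(disc, real)
    p_fake = discriminate(disc, fake)
    gw = (sum(-(1 - p) * x for p, x in zip(p_real, real)) / len(real)
          + sum(p * x for p, x in zip(p_fake, fake)) / len(fake))
    gc = (sum(-(1 - p) for p in p_real) / len(real)
          + sum(p for p in p_fake) / len(fake))
    return (w - lr * gw, c - lr * gc)


def gen_step(gen, disc, noise, lr=0.05):
    # One gradient-descent step on -log D(G(z)): the generator is rewarded
    # when the discriminator mistakes its output for real data.
    a, b = gen
    w, _ = disc
    p = discriminate(disc, generate(gen, noise))
    ga = sum(-(1 - pi) * w * z for pi, z in zip(p, noise)) / len(noise)
    gb = sum(-(1 - pi) * w for pi in p) / len(noise)
    return (a - lr * ga, b - lr * gb)


def train(steps=2000, batch=64, seed=0):
    # Alternate the two updates. "Real" data is assumed to cluster around 4.0.
    rng = random.Random(seed)
    gen, disc = (1.0, 0.0), (0.0, 0.0)
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        disc = disc_step(disc, real, generate(gen, noise))
        gen = gen_step(gen, disc, noise)
    return gen, disc
```

Even at this scale the division of labor is visible: the generator never sees the real data directly and learns only from the discriminator's verdicts. Image-making GANs replace these two-parameter functions with deep networks, but the alternating loop has the same shape.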

GAN art engages only in combinatorial creativity, meaning it synthesizes existing image data to make something slightly new, based on its imperfect ability to correlate an image to assigned metadata. Mordvintsev fed a neural network images of cats and dogs, then interrupted it midstream to watch it try to build a cat or a dog itself from scraps. The GAN is cognitively inferior to a newborn child. It needs to be fed source material specifically and carefully. One of AI-art criticism’s first tasks will be to show the public that supposedly “smart” technologies are, in practice, quite dumb. Just because GANs employ unsupervised learning—meaning there is no predefined output—does not make them creative.

Artist Casey Reas details the many steps involved in the production of GAN art in Making Pictures with Generative Adversarial Networks (Anteism Books, 2020). One of the developers of the generative art software Processing, Reas provides a text that is part “how to,” part reflection on the aesthetic capacities of the method. It is the best account so far of how a critic might engage with the end product of something on the order of DeepDream.

To start, Reas is adamant that with each step in the highly complex process of working with GANs, the artist makes critical interventions. First, one must select and upload a large set of images. Reas compares this process to a photographer’s choosing subjects, staging, and equipment. Second, one makes adjustments to interrupt or influence the neural network’s output. These choices are often speculative, and the end result is always a matter of testing and learning. Reas describes it as coaxing images from the “latent space.” No one is sure exactly how the networks’ layers learn, and thus, no one is quite sure why the networks modify images the way they do.

Courtesy the artist.

Reas gives a sober assessment of the role of the artist vis-à-vis these nascent tools. He is neither utopian about superhuman powers nor dismissive of AI-based creative output, as limited and contrived as it may be. Instead, he lays out the points of entry for criticism. Anywhere the artist directly intervenes, the critic may follow. A critic writing about DeepDream could, for example, point to the narrowness of Mordvintsev’s selection of images of cats and dogs, and how the end product suffered as a result. Or the critic could comb through the various versions produced by the neural network and judge the relative merits of each composition. Both of these approaches treat the artist-engineer as a curator, as someone whose primary work is the selection of images. This is because the true moment of machine creativity is illegible to humans. If it weren’t, then there would be nothing artificial about its intelligence.

Proponents of AI art are quick to defend the new genre with comparisons to photography. “Generating an image with a GAN can be thought of as the start of another process, in the same way that capturing an image with a camera is often only one step in the larger system necessary for making a picture,” writes Reas. When artists use a camera, it is clear what they intend to create. Accidents happen, to be sure. But no previous creative process has ceded more control to mathematics than working with AI.

Media theorist Lev Manovich goes beyond artistic use of machine learning, taking a broader view of AI’s impact on culture. In AI Aesthetics (Strelka, 2018), he focuses on the AI built into apps like Instagram—the commercialized creative AI that he says could influence “the imaginations of billions.” Manovich usefully explores the negative implications of the “gradual automation (semi or full) of aesthetic decisions.” This leads him to touch eventually on a possible future in which AI becomes a kind of cultural theorist. Manovich cites a common case: a computer that is fed multiple examples of artworks can use machine learning to detect basic attributes that belong to certain styles and genres. After this, the system can accurately identify the style or genre of a new image fed into the network. While Manovich suggests that this is, on the surface, one of the functions of a cultural critic or historian, he also points out its limitations. The GAN that identifies a style or genre is just as much a black box as the GAN that produces new art. While the “cultural analytics” that Manovich predicts may empower machine learning to do the descriptive work of historiography, telling us the what, where, who, and when on a scale we have never before experienced, it is unable to access the critic’s ability to tell us about the how and why. As these semi-automated aesthetic categorizations become more common, there’s a risk of impoverishing critical inquiry by erasing human reference points.
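The "common case" Manovich cites, a system that learns styles from examples and then labels a new image, can be sketched in a few lines. This is a minimal illustration that assumes each image has already been reduced to three numeric features; the feature names, the values, and the nearest-centroid method are all invented for the example and are not drawn from Manovich's book.

```python
import math


def centroid(vectors):
    # Component-wise mean of a list of equal-length feature vectors.
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def fit(examples):
    # examples: {style label: [feature vectors]} -> {style label: centroid}
    return {label: centroid(vecs) for label, vecs in examples.items()}


def classify(model, features):
    # Return the label whose centroid is closest in Euclidean distance.
    return min(model, key=lambda label: math.dist(model[label], features))


# Invented features per image: (mean brightness, color saturation, edge density).
training = {
    "style_a": [[0.2, 0.3, 0.8], [0.3, 0.2, 0.7], [0.25, 0.35, 0.9]],
    "style_b": [[0.8, 0.9, 0.2], [0.7, 0.8, 0.3], [0.9, 0.85, 0.1]],
}
model = fit(training)
label = classify(model, [0.75, 0.8, 0.25])  # closest to the "style_b" examples
```

The output is a label and nothing more. The sketch answers the "what" of an image's style while offering no account of how or why the style came about.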

The current discourse on art and AI offers two ways to situate the artist’s agency. Miller represents the computationalist school of thought, where all the faculties of human genius will, in due time, be usurped by the powers of machine learning. Reas, however, places the human artist at the center, explaining how AI is actually a set of discrete tools used by the artist. Most people working with these new tools fall somewhere in between, as evidenced by Manovich’s hopes for a branch of cultural analytics that combines the data scientist’s precision with the humanist’s theoretical analysis. But there is little doubt that with the introduction of these machine learning abilities—whether they be the superintelligent computers of Miller’s fantasy or the extensions of the artist’s tool kit described by Reas—the critical observer will need a new vocabulary. The math behind all these tools challenges the critic’s understanding and application of context, meaning, intent, and influence. The more advanced the GANs become, the less there is to say.

But there could be a more expansive and generative future for critique. There is a growing movement in Silicon Valley to integrate neural network capabilities into all parts of life, from loan application assessment to predictive policing. The designs for this future are being implemented by massive technology platforms that already act like states: mapping the world, working on defense projects, impacting the outcome of elections. Against this background, the computationalist notion that the human brain is a suboptimal computer has enormous political and ethical weight. The critical discourse around art and AI takes on urgent political significance. Critics cannot simply ignore AI creativity as they might have ignored momentary fads in the past. Art institutions are now eagerly partnering with corporations that conduct research in machine learning, seeing them as new sources of funding and audience engagement. Artists today can employ the same tools used to drive autonomous vehicles, surveil and track immigrants, or calculate the probability that a person will commit a crime. In this rapidly changing climate, criticism of art made with AI has a responsibility to engage in a structural analysis that identifies the position of GANs in the system of power relations known as platform capitalism.

Seeing, Naming, Knowing (Brooklyn Rail, 2019), by critic and curator Nora N. Khan, is the strongest example of criticism that addresses this new algorithmic regime. Khan takes law enforcement’s deployment of racially biased automated surveillance tools as a starting point. She then discusses the work of Trevor Paglen, Ian Cheng, and Sondra Perry, identifying automated image production as a profound transition from passive to active camera vision. Her analysis reveals a drive toward a machine-enabled omniscience. Large-scale machine learning marches inexorably toward optimization, always seeking more data in an attempt to smooth out mistakes in computational judgment. Worst of all, these highly orchestrated efforts present themselves as neutral, objective, and impervious to critique. “Seeing is always an ethical act,” Khan argues. “We have a deep responsibility for understanding how our interpretation of information before us, physical or digital, produces the world.”

AI creativity, however tenuous, is an early indication that art has now become inextricable from the same platforms that run the information economy and all its newly potent political apparatuses. Criticism of AI art must acknowledge this reality, and explore how it shapes the art of our time.

1 A. Michael Noll, “Patterns by 7090,” Bell Labs Inc. memo, Aug. 28, 1962, noll.uscannenberg.org.

This article appears under the title “Critical Winter” in the April 2020 issue, pp. 26–29.
